Strategic Assessment and Key Problems Emerging from Deepfakes

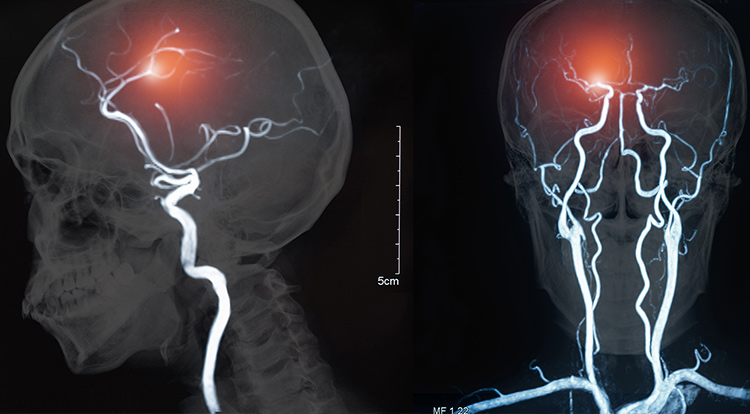

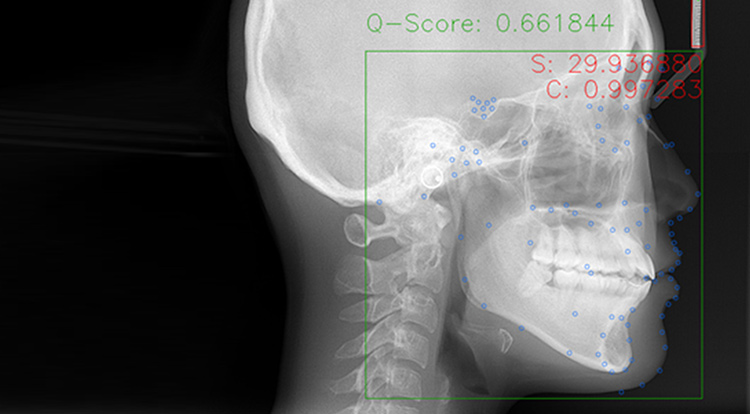

GANs can generate almost entirely new data. Their strategic uses therefore extend to practically any sector of business and government. As with any new technology that spreads across industries, there is no obvious limit to where GANs can make a contribution.

One of the most influential GAN uses, and one that keeps gaining momentum, is image creation. Technologies for creating and altering imagery are not a complete novelty. Programs like Adobe Photoshop gave regular consumers the ability to create images from scratch. But even users with adequate skills were limited in what they could achieve.

Algorithmically generated imagery is revolutionizing the field of doctored images. This is even more impressive considering how recent the technology is: GANs were first introduced in 2014 and gained wider recognition only in 2016-2017.

The main problem with GANs is their ability to spread misinformation through high-quality fake content (text, images, video, speech). This issue is not unique to generative models, but the technology is far more powerful than the primitive tools of the past. History shows that a technology may be neutral in itself, yet anything this influential can be put to manipulative use.

Even though the ability to manipulate data can be misused, there are plenty of useful and, at times, even silly applications. The rule of thumb in such cases is to "do no harm". For instance, the three laws of robotics are concerned with not injuring humans, following orders, and preserving the robot’s existence. Deepfake technology could adopt some equivalent of these. Regulators can also focus on specific use cases and frame the rules around protecting human rights.

In any case, pretending to be someone else is something you shouldn’t do, not only from a legal standpoint but from a social one as well. People identify one another through their senses: by how others look, sound, and speak. The technology is already at the point of generating fake content capable of deceiving all of these senses, so protective measures should be put in place.